Evaluating and Comparing Deep Models

To help evaluate Deep Learning segmentation quality, this software release features a model evaluation tool that lets you compare models using selected metrics. These include binary cross entropy, mean absolute error, and Poisson for regression, as well as Jaccard similarity, accuracy, and Dice for binary segmentation.
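As a rough illustration of what the binary segmentation metrics measure, the following Python sketch computes accuracy, Dice, and Jaccard similarity from a predicted and a reference labeling. It is not the tool's implementation: the function name `binary_metrics`, the flattened 0/1 input lists, the optional `mask` argument, and the convention of returning 1.0 when both labelings are empty are all assumptions made for this example.

```python
def binary_metrics(pred, truth, mask=None):
    """Return accuracy, Dice, and Jaccard for two flattened binary labelings.

    pred, truth: sequences of 0/1 values of equal length (hypothetical inputs).
    mask: optional sequence of 0/1 values; only voxels where mask is 1 count.
    """
    if mask is None:
        mask = [1] * len(truth)

    tp = fp = fn = tn = 0
    for p, t, m in zip(pred, truth, mask):
        if not m:
            continue  # voxel lies outside the evaluation mask
        if p and t:
            tp += 1   # true positive
        elif p:
            fp += 1   # false positive
        elif t:
            fn += 1   # false negative
        else:
            tn += 1   # true negative

    total = tp + fp + fn + tn
    overlap_denominator = tp + fp + fn
    accuracy = (tp + tn) / total if total else float("nan")
    # Assumption: two empty labelings are treated as a perfect match.
    dice = 2 * tp / (2 * tp + fp + fn) if overlap_denominator else 1.0
    jaccard = tp / overlap_denominator if overlap_denominator else 1.0
    return {"accuracy": accuracy, "dice": dice, "jaccard": jaccard}


# Toy 2 x 4 image, flattened row by row.
truth = [1, 1, 0, 0, 0, 1, 0, 0]
pred = [1, 0, 0, 0, 0, 1, 1, 0]
scores = binary_metrics(pred, truth)
print(scores)
```

In this toy case there are two true positives, one false positive, one false negative, and four true negatives, so accuracy is 0.75, Dice is about 0.667, and Jaccard is 0.5. Dice and Jaccard ignore true negatives, which is why they are often preferred over accuracy when the segmented phase occupies only a small part of the image.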

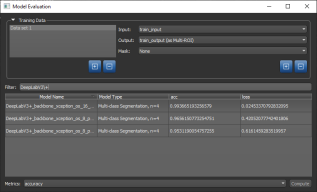

Choose Artificial Intelligence > Deep Learning Model Evaluation Tool on the menu bar to open the Model Evaluation dialog, shown below.

Model Evaluation dialog

- Load the training set(s) that will provide the input(s) and output(s) for the evaluation, as well as any mask(s) that you intend to apply.

- Choose Artificial Intelligence > Deep Learning Model Evaluation Tool on the menu bar.

The Model Evaluation dialog appears.

- Choose the required input in the Input drop-down menu.

- If required, select the number of slices used to train the model(s) you want to evaluate. Enter '1' if you trained the model(s) in 2D and '3' or more if you trained the model(s) in 3D.

- If you selected a multi-slice model, choose a reference slice and spacing.

- Choose the required output in the Output drop-down menu.

All saved models that match the input and output criteria appear in the dialog. If required, you can filter the list by entering text in the Filter edit box.

- Optionally, choose a mask in the Mask drop-down menu.

- If your model(s) were trained with multiple inputs, click the '+' button to add a new training dataset and then choose the required input in the Input drop-down menu.

All saved models that match the input and output criteria appear in the dialog. If required, you can filter the list by entering text in the Filter edit box.

- Select the model(s) that you want to evaluate.

- Choose the required metrics in the Metrics drop-down menu.

- Click the Compute button.
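To make the comparison step concrete, the sketch below scores the predictions of two hypothetical models against the same reference output with the three regression metrics named above, then picks the model with the lowest mean absolute error. The formulas follow common deep learning conventions and are an assumption, since the exact definitions used by the tool are not stated here. The model names, the data, and the `compare_models` helper are also invented for illustration.

```python
import math

EPS = 1e-7  # small constant to keep log() away from zero (assumed value)


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, y_pred):
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, EPS), 1.0 - EPS)  # clip predictions into (0, 1)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total / len(y_true)


def poisson(y_true, y_pred):
    # Mean of (prediction - target * log(prediction)).
    return sum(p - t * math.log(p + EPS) for t, p in zip(y_true, y_pred)) / len(y_true)


METRICS = {
    "mean_absolute_error": mean_absolute_error,
    "binary_cross_entropy": binary_cross_entropy,
    "poisson": poisson,
}


def compare_models(y_true, predictions, metric_names):
    """Return {model name: {metric name: value}} for every model."""
    return {
        name: {m: METRICS[m](y_true, y_pred) for m in metric_names}
        for name, y_pred in predictions.items()
    }


# Hypothetical reference output and predictions from two saved models.
y_true = [0.0, 1.0, 1.0, 0.0]
predictions = {
    "model_a": [0.1, 0.9, 0.8, 0.2],
    "model_b": [0.4, 0.6, 0.5, 0.5],
}

table = compare_models(y_true, predictions, list(METRICS))
for name, row in table.items():
    print(name, {metric: round(value, 4) for metric, value in row.items()})

# All three metrics here are losses, so lower values indicate a better fit.
best = min(table, key=lambda name: table[name]["mean_absolute_error"])
print("Lowest mean absolute error:", best)
```

With this data, `model_a` has the lower value on all three metrics. When models rank differently across metrics, choose the metric that best reflects the error you most need to avoid.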